The first question we tackled was whether there was some code difference between the 3-d and 2-d registration models. We reduced the chances of this by refactoring the code base so that the 2-d and 3-d codes use the same codebase, with a minimum of if-statements guarding dimensionality specific code. This is easier to verify than the previous project of comparing jupyter notebooks for 2-D code and python scripts for 3-D registration. Unfortunately, it has not revealed any discrepancy- the previous notebook-script mess was correct.

The second question is what happens in a 3-D triangles and circles situation. We investigated this by training just registering the lung masks, which is a much simpler task. The network was able to align them, and the transformer and displacement predictors shared work like []

The third question is "is the retina registration transformer only able to capture complex transforms because it memorizes the train set?" We evaluate it on a held out test set and it captures the detailed transform, making this unlikely.

Huge breakthrough from lin: turning up the diffusion regularization in the split regularizer replicates the problem in 2-d. Turning down the diffusion regularization has no effect in 2d, other than slightly slower initialization (stays scrambled eggs longer). Trying now in 3d

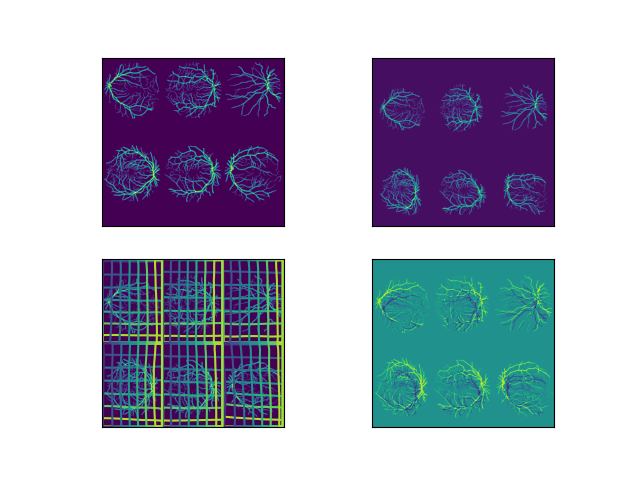

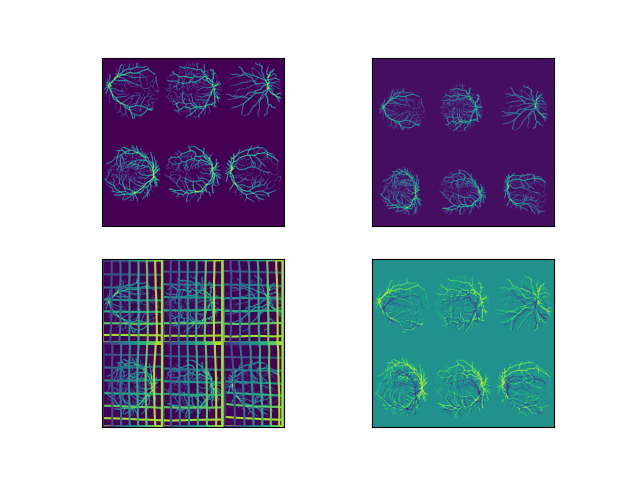

(figure of 2d result with over regularization)

whole network

just transformer

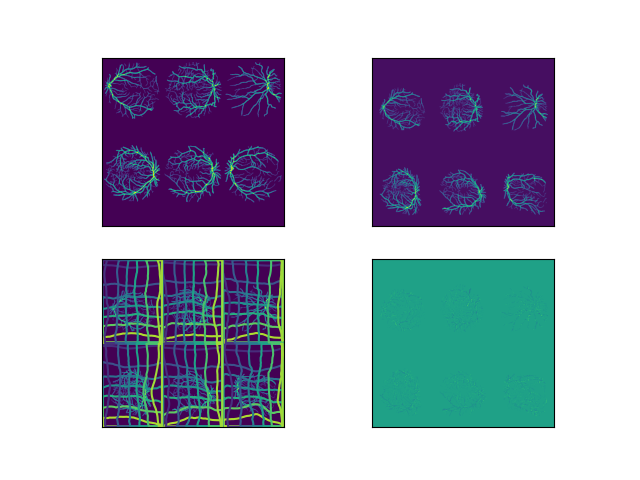

(figure of 2d result with under-regularization)

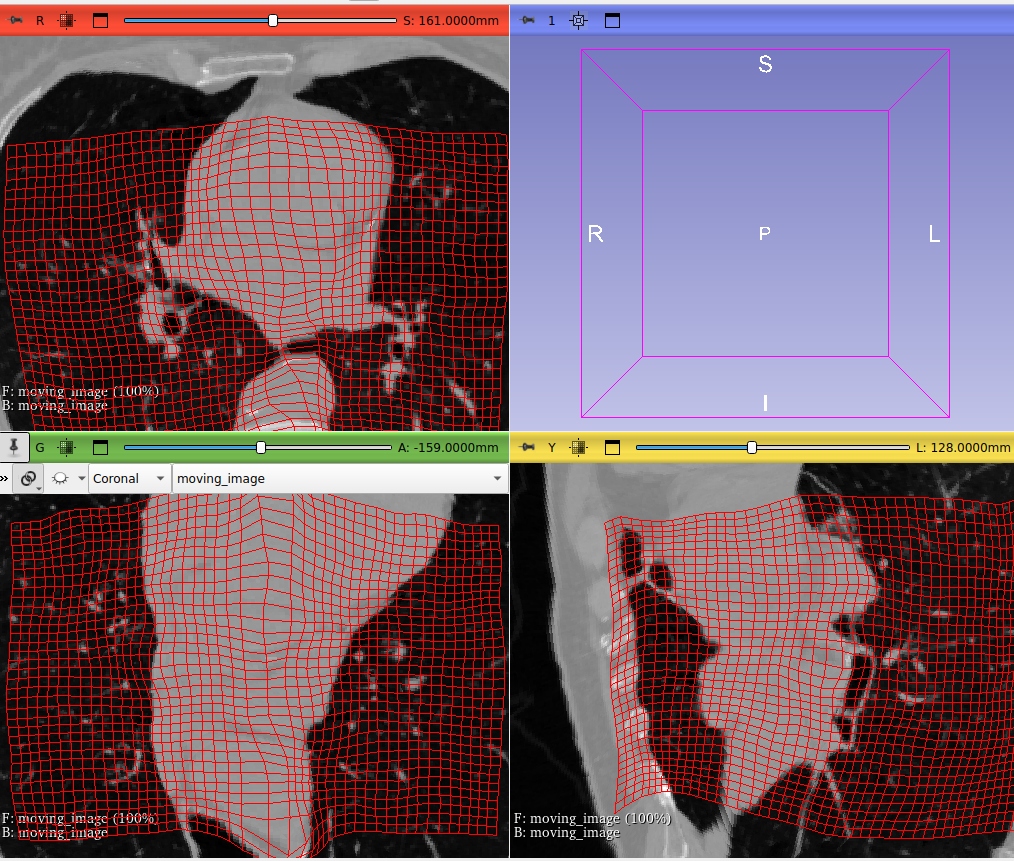

In 3d, turning down the diffusion regularization causes a moderate improvement in similarity, and more importantly successfully caused the transformer to begin doing its share of the work.

MTre results:

With diffusion_reg=0.02,

Full network error on copdgene1: 5.1

Just equivariant transformer: 5.5With diffusion_reg=1.5 (old setting)

Full network on copdgene1: 6.6

Just equivariant transformer: 12This is just with overnight training, we will see if training more or other hacks improves results further.

results at

/playpen-raid1/tgreer/equivariant_reg_2/evaluation_results/reg_.15-1/,

/playpen-raid1/tgreer/equivariant_reg_2/evaluation_results/reg_.15_just_equivar/3d just transformer

The improvement in mtre is modest (1.5 mm in in this setting with matched, abbreviated training) which is probably why this issue was not noticed earlier, but this is major progress in internal network behavior.

Back to Reports