Friday tasks

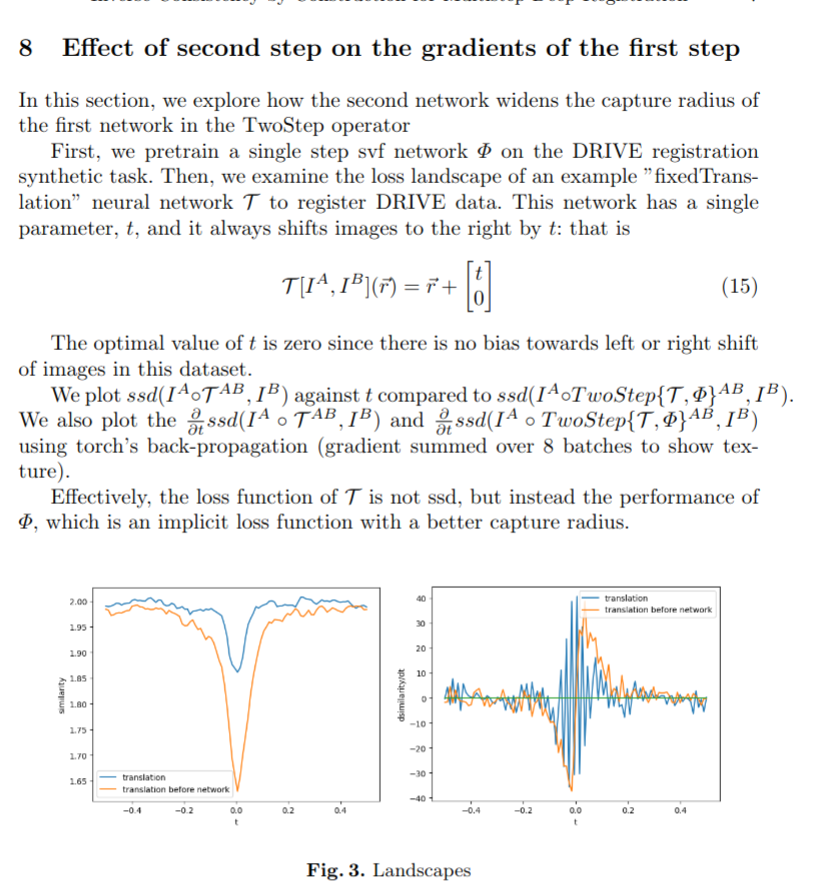

Investigate the loss landscape that the first network induces on the second network in TwoStep

todo: extend this to TwoStepConsistent

Begin lung training

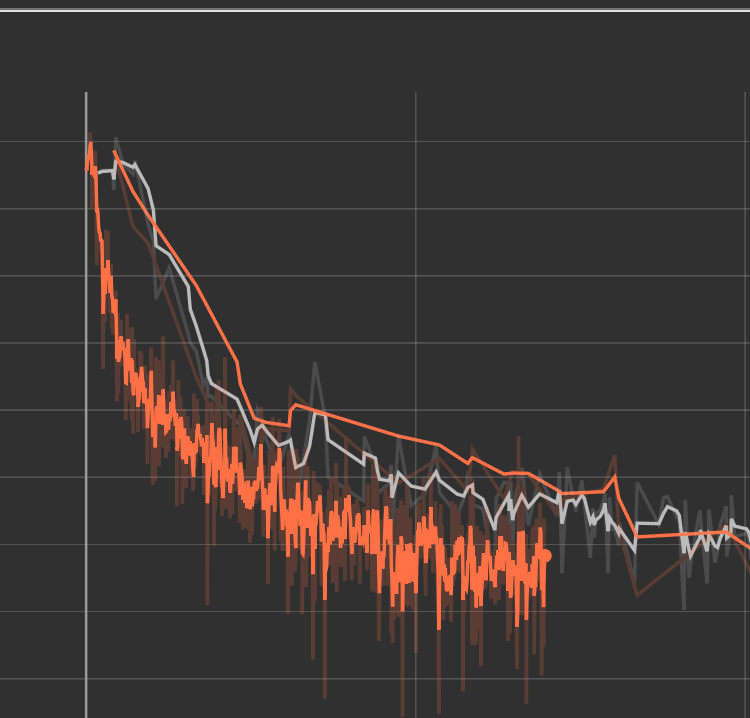

Preliminary real medical training. We train a 3-step inverse-consistent-by-construction network on the 1000-image lung dataset. So far it is training faster than GradICON, with no folds. I am using a bending energy regularizer and U-Nets for all three steps. I would also like to explore joint affine and deformable registration for this task. I am using the masked lungs, but am curious about performance on the unmasked lungs, especially if I switch to a total variation regularizer.
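For reference, a minimal sketch of the two regularizers being compared, written for a 2D displacement field of shape `(2, H, W)` with finite differences. The function names and the 2D setting are assumptions for illustration, not the training code. Bending energy penalizes second derivatives, so it is zero for any affine displacement. Total variation penalizes the absolute first derivatives, so it tolerates sharp transitions such as sliding at the lung boundary.

```python
import numpy as np

def bending_energy(u, spacing=1.0):
    """Mean squared second derivatives of a displacement field u, shape (2, H, W)."""
    energy = 0.0
    for c in range(u.shape[0]):
        d_y, d_x = np.gradient(u[c], spacing)      # first derivatives
        d_yy, _ = np.gradient(d_y, spacing)        # second derivatives
        d_xy, d_xx = np.gradient(d_x, spacing)
        energy += np.mean(d_yy**2 + d_xx**2 + 2.0 * d_xy**2)
    return energy

def total_variation(u, spacing=1.0):
    """Mean absolute first derivatives (anisotropic TV) of u, shape (2, H, W)."""
    tv = 0.0
    for c in range(u.shape[0]):
        d_y, d_x = np.gradient(u[c], spacing)
        tv += np.mean(np.abs(d_y) + np.abs(d_x))
    return tv
```

Either term would be added to the similarity loss with a weight; the weight is a hyperparameter not fixed here.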

Next task: disentangle deformable from affine with weight penalty on deformable?

Saturday

Inverse-consistent-by-construction affine registration: again appears to train faster than normal affine registration.
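One way to get an affine map that is inverse consistent by construction is to antisymmetrize the network output in the Lie algebra and then exponentiate, so that swapping the inputs negates the generator and therefore inverts the matrix. The sketch below assumes this construction and uses a hypothetical `toy_net` in place of a real network; `expm` is a small NumPy helper so the example has no SciPy dependency.

```python
import numpy as np

def expm(M, terms=30):
    """Matrix exponential via scaling and squaring with a truncated Taylor series."""
    norm = np.linalg.norm(M, ord=np.inf)
    squarings = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    scaled = M / (2.0 ** squarings)
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ scaled / k
        result = result + term
    for _ in range(squarings):
        result = result @ result
    return result

def toy_net(image_a, image_b, weights):
    """Hypothetical stand-in for a network: image pair -> 3x3 generator.

    The last row is zero, so the exponential is a 2D affine matrix
    in homogeneous coordinates.
    """
    features = np.concatenate([image_a.ravel(), image_b.ravel()])
    out = np.tanh(weights @ features).reshape(2, 3)
    return np.vstack([out, np.zeros((1, 3))])

def register_affine(image_a, image_b, weights):
    """exp(N(A, B) - N(B, A)); swapping A and B yields the inverse matrix."""
    generator = toy_net(image_a, image_b, weights) - toy_net(image_b, image_a, weights)
    return expm(generator)
```

With this structure, `register_affine(a, b, w) @ register_affine(b, a, w)` is the identity up to floating-point error for any weights, so no inverse consistency penalty is needed.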

Lung training failed to keep improving: plateaued around 1.4 without opt, 1.1 with. This is good: better than GradICON without second step. Don't have the VRAM budget for adding a second step to Constricon as it is, so need to either cut down memory use or find an alternative to two-step training.

Trying Marc's suggestion of level dropout as an alternative to second-step training: force every layer to attempt the full registration problem. So far it slows down training.
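A minimal sketch of what level dropout could look like, assuming it means randomly skipping levels during training so that the remaining ones have to cover the whole registration. Transforms are 3x3 homogeneous matrices to keep the example small; `run_levels`, `drop_prob`, and the callable-per-level interface are hypothetical names, not the actual implementation.

```python
import numpy as np

def run_levels(steps, drop_prob, rng, training=True):
    """Compose per-level transforms, skipping random levels while training.

    steps: list of callables; each takes the accumulated 3x3 transform
           and returns a 3x3 update to apply on top of it.
    Returns the composed transform and the boolean mask of kept levels.
    """
    n = len(steps)
    keep = np.ones(n, dtype=bool)
    if training:
        keep = rng.random(n) >= drop_prob
        if not keep.any():
            # Always keep at least one level so the output is not forced to identity.
            keep[rng.integers(n)] = True
    total = np.eye(3)
    for step, kept in zip(steps, keep):
        if kept:
            total = step(total) @ total  # a dropped level contributes the identity
    return total, keep
```

At evaluation time (`training=False`) all levels run, as with ordinary dropout.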

Monday

Todo: Basic concepts research: get diffusion working on MLP MNIST

train affine lung

make table of lung results

affine + weight penalty deformable
